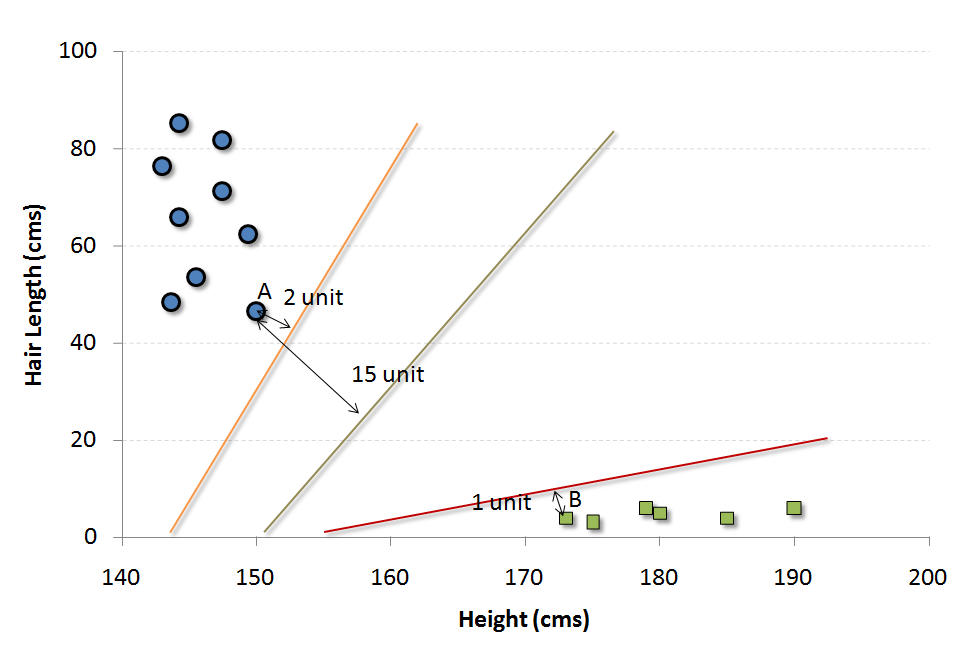

The main functions in the e1071 package are:

- `svm()`: used to train an SVM.
- `predict()`: returns predictions from the model, as well as decision values from the binary classifiers.
- `plot()`: visualizes the data, the support vectors and the decision boundaries, if provided.

A linear decision boundary can separate positive and negative examples in the transformed space. In the scikit-learn example, the data points are drawn with `plt.scatter(X[:, 0], X[:, 1], c=Y, cmap=plt.cm.Paired)`.

#Svm e1071 hyperplane code

I was able to reproduce the two-dimensional sample code found here.

The SVM solution: the idea is to transform the non-linearly separable input data into another (usually higher-dimensional) space, where a linear boundary can separate the classes.
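As a minimal sketch of that transformation idea, the toy example below uses made-up one-dimensional data in which the negative class sits between the positive points, so no single threshold on `x` works. The feature map `phi(x) = (x, x^2)`, the weights `w` and the offset `b` are illustrative assumptions chosen by hand, not values learned by any SVM library.

```python
# Hypothetical 1-D toy data: negatives lie between the positives,
# so no single threshold on x separates the two classes.
xs = [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
ys = [1, 1, -1, -1, -1, 1, 1]


def feature_map(x):
    """Map a 1-D input to 2-D: phi(x) = (x, x^2). An illustrative choice."""
    return (x, x * x)


# In the transformed space, the hyperplane w . z + b = 0 with these
# hand-picked values separates the classes (x^2 > 2.5 means positive).
w = (0.0, 1.0)
b = -2.5


def predict(x):
    """Classify x by the sign of the linear score in the transformed space."""
    z = feature_map(x)
    score = w[0] * z[0] + w[1] * z[1] + b
    return 1 if score > 0 else -1


predictions = [predict(x) for x in xs]
print(predictions == ys)
```

A kernel SVM does something similar implicitly: it never computes the transformed coordinates, only inner products between them.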

#Svm e1071 hyperplane how to

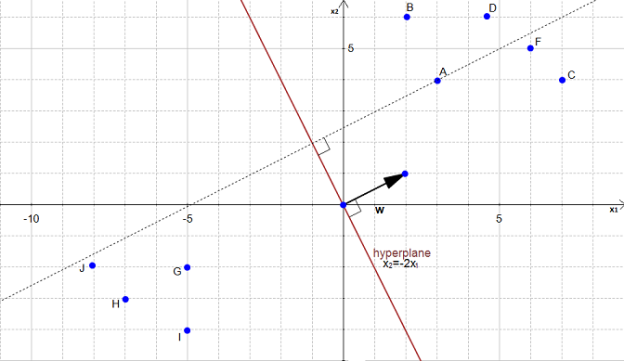

Hello, I am trying to figure out how to plot the resulting decision boundary from fitcsvm using 3 predictors.

Here is the classifier: `from sklearn.svm import LinearSVC`, followed by `clf = LinearSVC(C=0.2).fit(X_train_tf, y_train)`. Then I tried to plot as suggested on the scikit-learn website. That example gets the separating hyperplane, plots the parallels to the separating hyperplane that pass through the support vectors, and then plots the line, the points, and the nearest vectors to the plane, using a call of the form `plt.scatter(clf.support_vectors_[:, 0], clf.support_vectors_[:, 1], ...)`.

Step 2: You need to select two hyperplanes separating the data with no points between them. Finding two hyperplanes separating some data is easy when you have a pencil and paper.
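For a linear SVM with two features, the separating hyperplane is the set of points where `w . x + b = 0`, and the two parallel hyperplanes from Step 2 are `w . x + b = +1` and `w . x + b = -1`. The sketch below computes points on those three lines and the distance between the outer two. The values of `w` and `b` are made up for illustration; with scikit-learn they would typically come from `clf.coef_[0]` and `clf.intercept_[0]` of a fitted linear model. Note that this only applies directly when the model has exactly two features, which is not the case for TF-IDF text features.

```python
import math


def boundary_x2(w, b, x1, offset=0.0):
    """Solve w[0]*x1 + w[1]*x2 + b = offset for x2 (requires w[1] != 0)."""
    return (offset - b - w[0] * x1) / w[1]


def margin_width(w):
    """Distance between the hyperplanes w.x + b = +1 and w.x + b = -1."""
    return 2.0 / math.hypot(w[0], w[1])


# Hypothetical weights and intercept, standing in for a fitted 2-feature model.
w = (3.0, 4.0)
b = -2.0

x1_values = [0.0, 1.0, 2.0]
# Points on the decision boundary and on the two margin lines.
center = [boundary_x2(w, b, x1) for x1 in x1_values]
plus_margin = [boundary_x2(w, b, x1, offset=1.0) for x1 in x1_values]
minus_margin = [boundary_x2(w, b, x1, offset=-1.0) for x1 in x1_values]

print(center, margin_width(w))
```

The three lists can be passed to a plotting library as the y-coordinates of three straight lines over `x1_values`.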

I am trying to plot the hyperplane for the model I trained with LinearSVC and sklearn. Note that I am working with natural language: before fitting the model I extracted features with CountVectorizer and TfidfTransformer.

What is a hyperplane? In a p-dimensional space, a hyperplane is a flat affine subspace of dimension p-1. For example, in two-dimensional space a hyperplane is a straight line, and in three-dimensional space a hyperplane is a two-dimensional plane.

In e1071, `svm` is used to train a support vector machine. It can be used to carry out general regression and classification (of nu and epsilon-type), as well as density estimation. In MATLAB, fitcsvm trains or cross-validates a support vector machine (SVM) model for one-class and two-class (binary) classification on a low-dimensional or moderate-dimensional predictor data set.

In scikit-learn, a model can be created with `SVC(kernel='poly', C=100, gamma=0.0001)`. You can see a more detailed tutorial about how to tune Support Vector Machines by optimizing hyperparameters through the link below: Tuning Support Vector Machines. 2- Training SVC: we have the model and we have the training data, so now we can start training the model.

I'm working with Support Vector Machines from the e1071 package in R. I have a dataset containing order histories of ~1k customers over 1 year and I want to predict customer purchases. For every customer I have the information whether a certain item (out of ~50) was bought or not in a certain week (for 52 weeks, i.e. 1 year). My goal is to predict next month's purchases for every single customer. I believe that a purchase made, say, 1 month ago is more meaningful for my prediction than a purchase 10 months ago. My question is how I can give more recent data a higher impact. There is a 'weight' option in the svm function but I'm not sure how to use it. Can anyone give me a hint? It would be much appreciated! Side note: I'm fitting a model for every single customer.

That's my code: after the comment `# Fit model using Support Vector Machines`, I call `tuned.svm <- tune.svm(train, response, probability=TRUE, ...` (truncated here) and then `svm.pred <- predict(svm.model, test, probability=TRUE)`.
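One possible way to express "recent purchases matter more" is to give each weekly observation a weight that decays with its age. The sketch below is an assumption-laden illustration, not the e1071 approach: the function name `recency_weights` and the `half_life` parameter are hypothetical, and the exponential decay is just one reasonable choice. In scikit-learn, such a list could be passed to `SVC.fit` through its `sample_weight` argument; the e1071 option mentioned in the question may be per class rather than per observation, so it is worth checking in that package's documentation.

```python
import math


def recency_weights(weeks_ago, half_life=8.0):
    """Exponential decay: an observation half_life weeks old gets weight 0.5.

    Both the function and the half_life default are illustrative assumptions.
    """
    return [math.pow(0.5, age / half_life) for age in weeks_ago]


# 52 weekly observations, oldest first: ages 51, 50, ..., 0 weeks.
weeks_ago = list(range(51, -1, -1))
weights = recency_weights(weeks_ago)

# The newest week has weight 1.0; a week that is 8 weeks old has weight 0.5.
print(round(weights[-1], 3), round(weights[-9], 3))
```

A shorter `half_life` makes the model focus more strongly on the latest weeks, so it is a hyperparameter that would need to be tuned like `C` or `gamma`.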
